Understanding Data Ingestion: A Comprehensive Guide

Data ingestion is the first step in any data management and analysis workflow: nothing can be queried, modeled, or reported on until it has been brought into a system that can work with it. Whether you’re a seasoned data professional or just starting out, understanding ingestion helps you make informed decisions about your pipelines. This guide covers what data ingestion is, its main methods, why it matters, and the best practices that keep it reliable.

What is Data Ingestion?

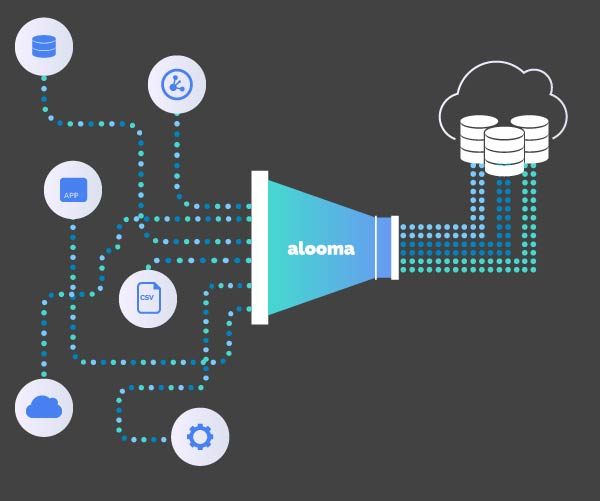

Data ingestion, in simplest terms, refers to the process of collecting, importing, and preparing data from various sources into a centralized system or database for further analysis. It’s like gathering puzzle pieces from different boxes before putting them together to reveal the big picture.

Methods of Data Ingestion

Batch Ingestion

Batch ingestion involves processing and importing data in predefined chunks or batches at scheduled intervals. This method is ideal for scenarios where data updates are not time-sensitive, such as historical analyses.
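As a rough illustration of the batch approach described above, the sketch below loads rows into an in-memory SQLite table in fixed-size chunks and commits once per chunk. The table name `events`, the sample rows, and the helper names `batched` and `ingest_in_batches` are assumptions made for this example, not part of any particular tool.

```python
import sqlite3
from itertools import islice


def batched(rows, size):
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def ingest_in_batches(conn, rows, batch_size=2):
    """Insert rows chunk by chunk, committing once per chunk."""
    # Hypothetical target table for the example.
    conn.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER, name TEXT)")
    batches = 0
    for chunk in batched(rows, batch_size):
        conn.executemany("INSERT INTO events (id, name) VALUES (?, ?)", chunk)
        conn.commit()
        batches += 1
    return batches


conn = sqlite3.connect(":memory:")
rows = [(1, "signup"), (2, "login"), (3, "purchase"), (4, "logout"), (5, "login")]
batch_count = ingest_in_batches(conn, rows, batch_size=2)
total = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
print(batch_count, total)
```

In a real pipeline a scheduler would trigger a job like this at each interval, and the batch size would be tuned to the target system.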

Real-time Ingestion

Real-time ingestion, also known as streaming ingestion, involves processing and transmitting data immediately as it’s generated or received. This method is crucial for applications like IoT devices and social media platforms, where up-to-the-minute insights are essential.
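To make the contrast with batch ingestion concrete, here is a minimal sketch that handles each event as soon as it arrives. It uses Python's standard `queue` and `threading` modules as a stand-in for a real streaming platform; the sensor readings, the `SENTINEL` end-of-stream marker, and the list used as a sink are all assumptions for illustration.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-stream marker for this example


def producer(q, readings):
    """Simulate a device that emits readings one at a time."""
    for reading in readings:
        q.put(reading)
    q.put(SENTINEL)


def consume(q, sink):
    """Handle each event as soon as it is available, with no batching."""
    while True:
        event = q.get()
        if event is SENTINEL:
            break
        sink.append(event)  # stand-in for writing to a real destination


q = queue.Queue()
sink = []
readings = [{"sensor": "t1", "temp_c": 21.5}, {"sensor": "t1", "temp_c": 21.7}]

t = threading.Thread(target=producer, args=(q, readings))
t.start()
consume(q, sink)
t.join()
```

The consumer never waits for a full batch: each reading is processed the moment the producer hands it over, which is the property that keeps latency low.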

Importance of Data Ingestion

Effective data ingestion lays the foundation for reliable analysis and decision-making. It:

- Enables Timely Insights: Real-time data ingestion allows organizations to gain insights into current trends and respond promptly;

- Enhances Data Accuracy: Careful ingestion minimizes errors, ensuring that the data used for analysis is accurate;

- Supports Scalability: Properly ingested data is organized and manageable, even as the volume of data grows;

- Facilitates Integration: Ingestion streamlines the integration of data from disparate sources, creating a cohesive dataset.

Best Practices for Data Ingestion

Data Validation and Cleaning

Validate and clean incoming data, and normalize inconsistent values, before it lands in the target system. This step prevents erroneous records from polluting your analysis.
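A validation step can be as simple as a function that either returns a cleaned record or rejects it. The sketch below is one possible shape for such a check; the field names (`email`, `age`) and the specific rules are assumptions chosen for the example and would differ for real data.

```python
def validate_record(record):
    """Return a cleaned copy of the record, or None if it fails validation."""
    # Normalize whitespace and case before checking the value.
    email = str(record.get("email", "")).strip().lower()
    if "@" not in email:
        return None
    try:
        age = int(record.get("age"))
    except (TypeError, ValueError):
        return None
    if not 0 <= age <= 130:
        return None
    return {"email": email, "age": age}


raw = [
    {"email": "  Ana@Example.com ", "age": "34"},
    {"email": "not-an-email", "age": "20"},
    {"email": "bo@example.com", "age": "unknown"},
]
clean = [r for r in (validate_record(x) for x in raw) if r is not None]
rejected = len(raw) - len(clean)
```

Counting rejected records, rather than dropping them silently, makes it easier to notice when a source starts sending malformed data.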

Metadata Management

Implement a robust metadata management system to keep track of the origin, structure, and lineage of your ingested data. This enhances traceability and data governance.
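One lightweight way to picture this is a small metadata record created for every ingested batch. The sketch below assumes a hypothetical `IngestionMetadata` structure and a made-up source name (`crm_export.csv`); a production system would more likely store this in a dedicated catalog.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class IngestionMetadata:
    source: str        # where the batch came from (origin)
    ingested_at: str   # UTC timestamp of ingestion (lineage)
    row_count: int
    columns: list      # observed field names (structure)
    checksum: str      # SHA-256 of the serialized batch


def describe_batch(source, rows):
    """Build a metadata record for a list of dict rows."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    columns = sorted({key for row in rows for key in row})
    return IngestionMetadata(
        source=source,
        ingested_at=datetime.now(timezone.utc).isoformat(),
        row_count=len(rows),
        columns=columns,
        checksum=hashlib.sha256(payload).hexdigest(),
    )


rows = [{"id": 1, "city": "Lisbon"}, {"id": 2, "city": "Porto"}]
meta = describe_batch("crm_export.csv", rows)  # hypothetical source name
record = asdict(meta)  # plain dict, ready to store alongside the data
```

The checksum lets you later confirm that a stored batch is the same one that was originally ingested.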

Scalability Considerations

Choose an ingestion method that matches both your data volume and how fresh the data needs to be. Scheduled batch ingestion is often sufficient when results can lag by hours, while continuously arriving, time-sensitive data calls for real-time processing.

Security Measures

Prioritize data security by encrypting sensitive data during ingestion and implementing access controls to safeguard your information.
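Python's standard library does not include symmetric encryption, so the sketch below shows a related but different technique: keyed hashing (HMAC-SHA256) to pseudonymize sensitive fields before they are stored. This is not encryption, because the original values cannot be recovered from the digests. The key, the field names, and the `pseudonymize` helper are assumptions for illustration only.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"demo-key-do-not-use"
# Hypothetical list of fields treated as sensitive.
SENSITIVE_FIELDS = {"email", "ssn"}


def pseudonymize(record):
    """Replace sensitive values with keyed SHA-256 digests."""
    masked = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS:
            digest = hmac.new(SECRET_KEY, str(value).encode("utf-8"), hashlib.sha256)
            masked[key] = digest.hexdigest()
        else:
            masked[key] = value
    return masked


record = {"id": 1, "email": "ana@example.com", "plan": "pro"}
masked = pseudonymize(record)
```

Because the same input always produces the same digest under a given key, pseudonymized fields can still be used for joins and deduplication without exposing the raw values.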

Data Ingestion in Action: A Comparison

| Aspect | Batch Ingestion | Real-time Ingestion |
|---|---|---|
| Processing Speed | Moderate | High |
| Latency | Higher | Extremely low |
| Use Cases | Historical analysis, reports | IoT applications, social media |
| Scalability | Suitable for moderate data volumes | Handles high data influx |

The Role of Data Ingestion in Business Growth

Data ingestion acts as the gateway to data-driven decision-making. By effectively gathering and preparing data, organizations can uncover hidden patterns, identify opportunities, and mitigate risks.

Challenges in Data Ingestion

Data ingestion, while essential, is not without its challenges. Understanding these challenges helps you devise strategies to overcome them:

Data Quality Assurance

Maintaining data quality during ingestion can be tricky, especially when dealing with large datasets. Ensuring consistency, accuracy, and integrity requires robust data quality assurance processes.

Data Transformation Complexity

Transforming data into a format compatible with your analysis tools can be complex. Different data sources might use varying structures, necessitating thorough transformation processes.
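The sketch below illustrates the problem with two made-up sources that describe the same kind of customer record in different shapes. Each source gets its own small adapter that maps it onto one common schema; the source layouts, field names, and function names are all assumptions for the example.

```python
from datetime import datetime


def from_crm(row):
    """Hypothetical CRM export: flat keys, day/month/year dates."""
    signup = datetime.strptime(row["SignupDate"], "%d/%m/%Y").date()
    return {"customer_id": int(row["CustomerID"]), "signup_date": signup.isoformat()}


def from_webshop(row):
    """Hypothetical web shop event: nested user object, ISO 8601 timestamp."""
    return {"customer_id": int(row["user"]["id"]), "signup_date": row["created"][:10]}


crm_row = {"CustomerID": "42", "SignupDate": "05/03/2023"}
shop_row = {"user": {"id": 7}, "created": "2023-03-06T10:15:00Z"}

# Both records now share the same keys and date format.
unified = [from_crm(crm_row), from_webshop(shop_row)]
```

Keeping one adapter per source confines each format quirk to a single place, so adding a new source does not affect the existing ones.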

Scalability and Performance

As data volumes increase, scalability becomes a concern. Your ingestion system should handle growing data without compromising performance or response times.

Real-time Ingestion Latency

Real-time ingestion aims for minimal latency, but achieving near-zero delays can be challenging. Balancing speed and accuracy is essential for meaningful real-time insights.

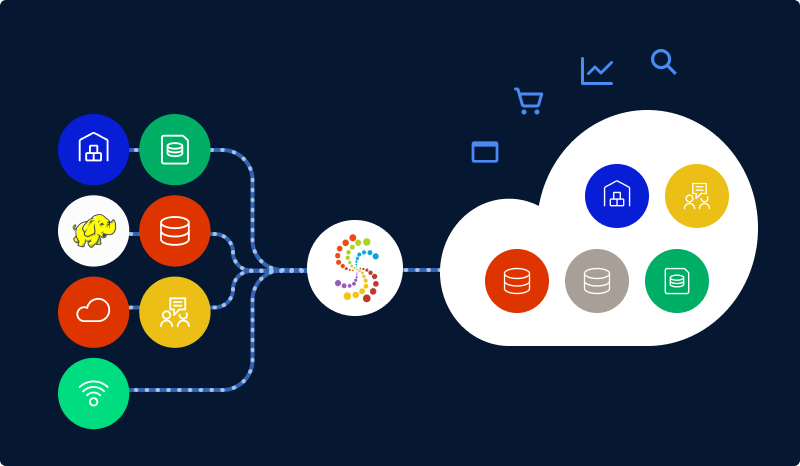

Choosing the Right Data Ingestion Tools

Selecting the appropriate data ingestion tools is pivotal for success. Several tools cater to different needs:

Apache Kafka

Ideal for real-time streaming, Apache Kafka handles high data throughput and offers robust data integration capabilities.

Apache NiFi

Known for its user-friendly interface, Apache NiFi facilitates data ingestion and movement from various sources to diverse destinations.

AWS Data Pipeline

For cloud-based environments, AWS Data Pipeline automates data movement and transformation, simplifying the ingestion process.

Talend

Talend offers a comprehensive data integration platform, enabling efficient batch and real-time data ingestion with ease.

Future Trends in Data Ingestion

Data ingestion continues to evolve, with several trends shaping its future:

Edge Computing Integration

As edge computing gains traction, real-time data ingestion from edge devices will become more critical for timely insights.

AI-driven Automation

Artificial Intelligence (AI) will play a more significant role in automating data ingestion processes, reducing manual intervention.

Increased Data Source Variety

With the proliferation of IoT and multimedia data, ingestion methods will need to adapt to handle diverse data sources effectively.

Enhanced Data Security

The focus on data security will intensify, leading to the development of more secure data ingestion methods and protocols.

Case Study: Airbnb’s Data Ingestion Strategy

Airbnb, a leader in the travel industry, relies heavily on data for its operations. The company uses a combination of real-time and batch data ingestion to monitor user behavior, analyze trends, and enhance user experiences.

By employing a versatile data ingestion strategy, Airbnb ensures that it can capture and utilize data from various sources, providing valuable insights for business growth.

Data Ingestion vs. ETL: Understanding the Differences

While data ingestion and Extract, Transform, Load (ETL) share similarities, they serve distinct purposes:

Data Ingestion

Data ingestion focuses on gathering and importing raw data from source systems into a centralized repository. It’s the initial step in the data processing pipeline and applies little or no transformation; its job is to make the data available for downstream processing and analysis.

ETL (Extract, Transform, Load)

ETL involves a more comprehensive process. Data is extracted from various sources, then transformed to fit the target system’s requirements before being loaded into a data warehouse. ETL encompasses data cleansing, enrichment, and aggregation.

| Aspect | Data Ingestion | ETL |
|---|---|---|
| Primary Goal | Import raw data | Prepare data for analysis |
| Transformation | Minimal | Extensive |
| Data Source Type | Raw data from source systems | Structured and semi-structured data |
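The difference can be seen side by side in a small sketch. Below, the ingestion step lands raw lines untouched in one SQLite table, and a separate ETL step extracts them, parses and aggregates them, and loads the result into a second table. The table names, the comma-separated line format, and the sample values are assumptions made for the example.

```python
import sqlite3

# Hypothetical raw order lines: date, customer, amount.
raw_lines = ["2024-01-01,alice,19.99", "2024-01-01,bob,5.00", "2024-01-02,alice,7.50"]

conn = sqlite3.connect(":memory:")

# Ingestion: land each line as-is, with no parsing or cleanup.
conn.execute("CREATE TABLE raw_orders (line TEXT)")
conn.executemany("INSERT INTO raw_orders (line) VALUES (?)", [(line,) for line in raw_lines])

# ETL: extract the raw lines, transform them (parse and aggregate), then load.
conn.execute("CREATE TABLE daily_revenue (day TEXT PRIMARY KEY, revenue_cents INTEGER)")
totals = {}
for (line,) in conn.execute("SELECT line FROM raw_orders"):
    day, _customer, amount = line.split(",")
    # Store money as integer cents to avoid floating-point drift.
    totals[day] = totals.get(day, 0) + round(float(amount) * 100)
conn.executemany("INSERT INTO daily_revenue VALUES (?, ?)", sorted(totals.items()))
conn.commit()

raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
daily = conn.execute("SELECT day, revenue_cents FROM daily_revenue ORDER BY day").fetchall()
```

The raw table still holds every original line, so the transformation can be rerun or changed later without going back to the source systems.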

Conclusion

In the dynamic landscape of data analysis, mastering data ingestion is non-negotiable. It empowers you to harness the true potential of your data, turning raw information into actionable insights that drive growth and innovation.
